Three Concepts: Probability | Projects

A Bayesian has learned that a coin is biased. Rumour has it that the coin is weighted towards heads. Not taking rumours too seriously, our Bayesian chooses to model her belief in the heads-producing ability of the coin with a Beta(2,1) distribution. Later, however, after tossing the coin 20 times and getting the sequence TTTTTTHTHHTTTTTTTTHT, our Bayesian is forced to update her belief. What is her probability now that the coin produces heads more than 55 percent of the time? Feel free to use the beta calculator to find the answer.
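As a cross-check on the beta calculator, the update can be sketched in a few lines of Python. This is only one possible approach. It assumes the usual conjugate update (a Beta(a, b) prior with h heads and t tails gives a Beta(a + h, b + t) posterior). It also relies on the standard identity that, for integer parameters, the Beta distribution function equals a binomial tail probability. The helper name `beta_upper_tail` is made up for this illustration.

```python
from math import comb

tosses = "TTTTTTHTHHTTTTTTTTHT"
heads = tosses.count("H")
tails = tosses.count("T")

# Conjugate update: Beta(a, b) prior + coin tosses -> Beta(a + heads, b + tails)
prior_a, prior_b = 2, 1
post_a, post_b = prior_a + heads, prior_b + tails


def beta_upper_tail(a, b, x):
    """P(theta > x) for theta ~ Beta(a, b), valid for positive integers a, b.

    Uses I_x(a, b) = P(Bin(a + b - 1, x) >= a), so the upper tail equals
    P(Bin(a + b - 1, x) <= a - 1).
    """
    n = a + b - 1
    return sum(comb(n, j) * x**j * (1 - x) ** (n - j) for j in range(a))


p_heads_biased = beta_upper_tail(post_a, post_b, 0.55)
print(f"Posterior: Beta({post_a}, {post_b})")
print(f"P(theta > 0.55 | data) = {p_heads_biased:.6f}")
```

The binomial identity keeps the sketch free of external libraries. For non-integer parameters a numerical incomplete beta function would be needed instead.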

Let us try to exercise some telekinesis. Take a coin. Then decide, before tossing the coin, the outcome of the toss. For instance, decide that the coin will land heads up. Then toss the coin in the usual way, so that it flips over several times in the air. If the coin indeed lands heads up, you are successful.

Now we may rudely doubt your telekinetic powers and claim that a single toss was just as likely to fall the way you decided as the other way. You must therefore repeat the trial several times, say 10 times. We will then compare two models. In the first, your decisions and the coin flips are independent Bernoulli random variables with unknown biases. In the second, your decisions are Bernoulli random variables, independent of each other, but the outcomes of the coin tosses depend on your decisions. In terms of Bayesian networks, these two models correspond to the empty network (with no arcs) and the connected network with an arc from the decision node to the outcome node. Assume a uniform prior for all parameters in each model and a uniform prior over the two models.

Report the posterior probabilities of the two models after one, two, ..., ten coin flips. Hint: Recall that you can obtain the model posterior via Bayes' rule from the marginal likelihood of the observed sequence. In calculating the marginal likelihood of the observed sequence you will only need to repeatedly use Laplace's rule.
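The hint can be turned into a short sketch. Under a uniform prior, Laplace's rule gives the predictive probability (k + 1) / (n + 2) of a 1 after seeing k ones in n trials, and the marginal likelihood of a sequence is the product of these predictive probabilities. The code below assumes that, in the connected model, the outcome has a separate uniformly distributed parameter for each value of the decision. The function names and the example data are hypothetical; substitute your own recorded decisions and outcomes.

```python
from fractions import Fraction


def laplace(ones, trials):
    """Laplace's rule: P(next = 1 | ones out of trials), uniform prior."""
    return Fraction(ones + 1, trials + 2)


def prob_of(value, p_one):
    """Probability of the observed binary value given P(value = 1)."""
    return p_one if value == 1 else 1 - p_one


def model_posteriors(pairs):
    """P(connected model | first n pairs) for n = 1..len(pairs).

    pairs: list of (decision, outcome) tuples with entries 0 or 1.
    Both models get prior probability 1/2.
    """
    ml_empty = Fraction(1)       # marginal likelihood, no arc
    ml_connected = Fraction(1)   # marginal likelihood, decision -> outcome
    dec = [0, 0]                 # counts of each decision
    out = [0, 0]                 # counts of each outcome
    cond = [[0, 0], [0, 0]]      # cond[d][o]: outcome counts per decision
    result = []
    for d, o in pairs:
        n = dec[0] + dec[1]
        p_dec = prob_of(d, laplace(dec[1], n))
        p_out = prob_of(o, laplace(out[1], n))
        p_cond = prob_of(o, laplace(cond[d][1], cond[d][0] + cond[d][1]))
        ml_empty *= p_dec * p_out
        ml_connected *= p_dec * p_cond
        dec[d] += 1
        out[o] += 1
        cond[d][o] += 1
        # Equal model priors cancel in Bayes' rule.
        result.append(ml_connected / (ml_empty + ml_connected))
    return result


# Hypothetical record of ten (decision, outcome) pairs, 1 = heads.
example = [(1, 1), (0, 0), (1, 0), (1, 1), (0, 1),
           (0, 0), (1, 1), (0, 0), (1, 0), (0, 1)]
for n, p in enumerate(model_posteriors(example), start=1):
    print(n, float(p), float(1 - p))
```

Exact fractions are used so that the values can be compared directly with a pencil-and-paper calculation. The decision terms are identical in both models and cancel in the posterior, but they are kept here so that each marginal likelihood is complete.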

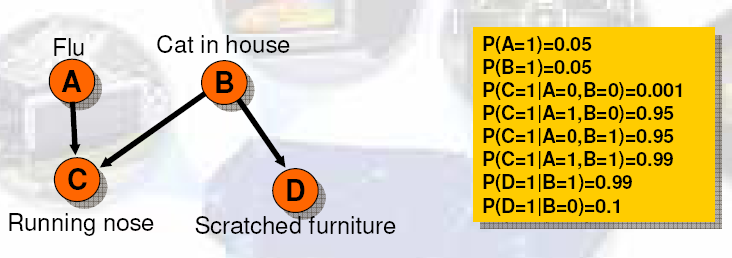

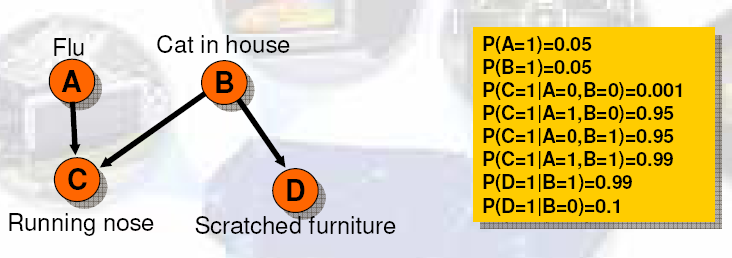

Use the "Cat network" discussed in the lectures (see below). Do the following inferences by, for instance, pencil and paper:

a. Evaluate the maximum a posteriori (MAP) configuration in the "Cat network" of the lectures given C=1 ("Running nose").

b. Evaluate the probability of A=1 ("Flu") given C=1.

c. Find the joint posterior distribution of variables B ("Cat in house") and C, given D=1 ("Scratched furniture"). (You should obtain a 2 by 2 table with probabilities summing to one.)
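A pencil-and-paper result can be checked by brute-force enumeration over all configurations. The sketch below is only an illustration. The arc structure (A and B as parents of C, B as parent of D) and every number in the probability tables are assumptions made up for this example, not the values from the lectures. Replace them with the actual network before comparing results.

```python
from itertools import product

# HYPOTHETICAL structure and numbers, NOT the lecture's Cat network:
# A (Flu) -> C (Running nose) <- B (Cat in house) -> D (Scratched furniture)
P_A = 0.1                                   # P(A=1)
P_B = 0.3                                   # P(B=1)
P_C = {(0, 0): 0.05, (0, 1): 0.5,
       (1, 0): 0.8, (1, 1): 0.9}            # P(C=1 | A, B)
P_D = {0: 0.1, 1: 0.7}                      # P(D=1 | B)


def bern(p_one, x):
    """Probability of binary value x given P(x = 1)."""
    return p_one if x == 1 else 1.0 - p_one


def joint(a, b, c, d):
    """Joint probability of one full configuration."""
    return (bern(P_A, a) * bern(P_B, b)
            * bern(P_C[(a, b)], c) * bern(P_D[b], d))


def posterior(query, evidence):
    """Distribution of the query variables given evidence, by enumeration."""
    names = "ABCD"
    table = {}
    for values in product((0, 1), repeat=4):
        world = dict(zip(names, values))
        if any(world[k] != v for k, v in evidence.items()):
            continue
        key = tuple(world[q] for q in query)
        table[key] = table.get(key, 0.0) + joint(*values)
    total = sum(table.values())
    return {k: v / total for k, v in table.items()}


# a. MAP configuration of (A, B, D) given C=1
post_abd = posterior("ABD", {"C": 1})
map_config = max(post_abd, key=post_abd.get)
# b. P(A=1 | C=1)
p_flu = posterior("A", {"C": 1})[(1,)]
# c. Joint posterior of (B, C) given D=1, a 2 by 2 table
table_bc = posterior("BC", {"D": 1})
print(map_config, p_flu, table_bc)
```

Enumeration is exponential in the number of variables, which is harmless for four binary nodes and makes each answer easy to trace back to the joint distribution.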
